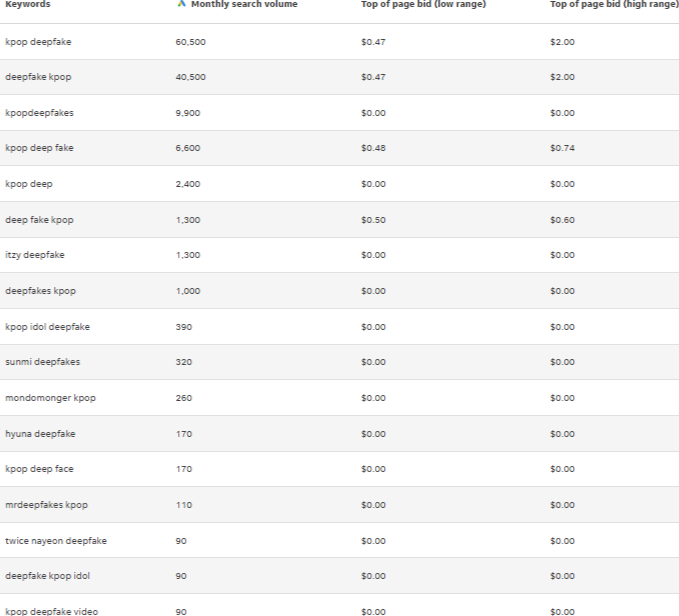

K-pop Deepfake! This is a Google search term with over 22,200 monthly searches. That figure, however, covers search queries in the USA only, and the volume grows once data from Europe and Asia is included. In fact, monthly searches for keywords like “K-pop Deepfake” in South Korea exceed 60,500. In this post, we’ll look at the growing threat of deepfake videos in the South Korean pop music industry.

But before we do that, let’s see what a deepfake is, how it is created, and why people are targeting K-pop idols. In the most basic sense, deepfake videos are fake videos of celebrities and other famous individuals, created using specialized software and video editing skills. Some individuals and groups create and spread fabricated, sexually explicit videos of K-pop idols.

What is K-pop Deepfake?

According to The Guardian, “The 21st century’s answer to Photoshopping, deepfakes use a form of artificial intelligence called deep learning to make images of fake events, hence the name deepfake.” The technology is widely used in politics, Hollywood movies, and adult videos.

The term “Deepfake” was first coined by a Reddit user named “deepfakes” in 2017. The user created a subreddit where users could share and watch pornographic videos that used open-source face-swapping technology to place popular celebrities into explicit scenes. The technology gradually became popular with other users, and deepfake videos became commonplace.

However, while many of these individuals were making funny videos and comic skits, others went on to use the technology to spread hate and provoke conflict. Moreover, deepfake technology made its way into mainstream entertainment: Hollywood, Bollywood, K-drama, and K-pop.

According to a 2018 Vice report, a majority of deepfake videos target female celebrities, and the geographical breakdown is striking. The report stated that about 41% of deepfake subjects are British or American actresses and around 25% are of South Korean descent, mostly K-pop idols.

If we look at the data again, it shows that the majority of searches for K-pop deepfakes are searches for female K-pop idols. Furthermore, this information is for the USA only, and the picture becomes more alarming if we change the location to South Korea.

Why does K-pop Deepfake exist?

A majority of K-pop deepfakes sexualize female K-pop idols. According to a report by AI firm Deeptrace, there were around 15,000 deepfake videos online in 2019, about 99% of which were adult content based on female celebrities.

There are various reasons why these videos exist on the internet and why they are being mass-produced. One of the main reasons is to spread hate against the targets. Other reasons include personal gratification and curiosity.

In some cases, fans of a particular K-pop group try to spread hate towards rival groups. In those campaigns, deepfakes have been widely used as weapons, according to Hye Jin Lee, Ph.D., a clinical assistant professor at the University of Southern California’s Annenberg School for Communication and Journalism.

K-pop has become a global phenomenon, with estimated growth of $5 billion per year. In that setting, the unwritten rules that idols do not speak openly about their sex lives, and that companies do not allow them to date, fuel curiosity among fans and haters alike.

Additionally, the South Korean government has banned all pornography websites, which may motivate anti-government actors to retaliate by using deepfake videos of K-pop idols.

Who or what is behind K-pop Deepfake videos?

Is Asia (China & S.Korea) ahead with #deepfakes technology and applications? A few signs may sugges so. Western news & media haven't looked much into it yet. By Google Trends: pic.twitter.com/uUQ3IVq6oS

— gio (@GiorgioPatrini) July 29, 2019

The Founder and CEO of SensityAI, Henry Ajder, also spoke about K-pop Deepfake videos. In one of his 2019 tweets, which shared a video from a Taiwanese YouTuber using face-swapping technology, he said, “The Kpop #deepfakes phenomenon has always been an “early trend.” We first heard about it by concerned stakeholders back on April 19. It’s been mostly about face swaps in adult videos, but not only. See this clip from a Taiwanese youtube channel swapping Korean to Chinese VIPs:”

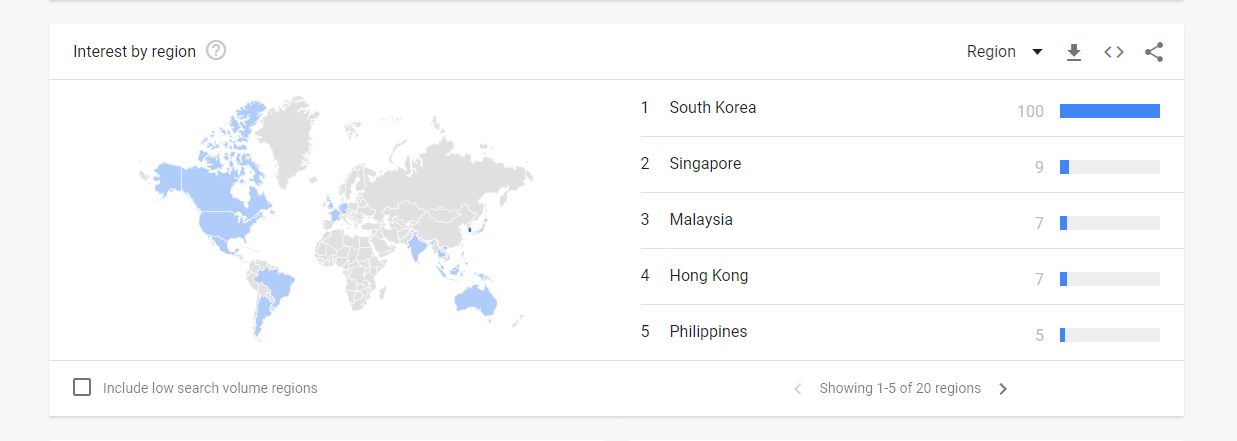

Interestingly, over the last five years, searches for “Kpop Deepfake” have been concentrated in Asia. South Korea leads with a value of 100%, followed by Singapore at 9%, Malaysia at 7%, Hong Kong at 7%, the Philippines at 5%, Thailand at 4%, Vietnam at 3%, and Taiwan at 3%.

The remaining countries had a search value of 1% or less. What is more interesting, however, is that China does not register at all for the keyword “Kpop deepfake.” This piqued my interest, since Chinese Internet users have access to VPN applications that connect to Google and other services banned in China.

The rise of Deepfake sites targeted toward Kpop idols

Countries in Asia, Europe, and America are already running programs to limit and stop the use of deepfake videos. Amid growing concern for K-pop idols’ reputations, South Korea has enacted strict laws against creating and distributing deepfake videos on adult websites.

However, new research shows that the people behind these videos have found a way to produce and share more deepfake videos without relying on third-party websites.

Following the revision of the existing Korean ‘Act on Special Cases Concerning the Punishment, Etc. of Sex Crimes’, which includes a fine of 50 million won ($40,500) and up to five years in prison, offenders have created their own deepfake websites. On these sites, 99% of the uploaded content consists of deepfake videos of celebrities, primarily female singers, dancers, actors, and public figures.

According to recent research, contrary to popular belief, most of these websites are not hosted on the deep or dark web. They are hosted on the surface web using standard hosting and domain services. Most of them are created specifically to distribute adult face-swapped videos of K-pop idols.

In 2022, a cyber monitoring firm, Criminal IP, analyzed deepfake websites targeting Korean celebrities and singers. The report stated that a single website hosted a total of 190 categories named after K-pop idols and singers. Each category had multiple deepfake videos of K-pop idols, such as Suzy from Miss A, Lisa from BLACKPINK, Nancy from Momoland, Karina from Aespa, and many more.

Criminals hide their IP using Cloudflare

While analyzing more deepfake websites, specifically those targeting K-pop idols, Criminal IP discovered another website using Cloudflare services to hide its original IP address. When the researchers traced the website’s IP address, they found that it mapped to two different IPs (172.67.210.176 and 104.21.69.174).

Both were found to be Cloudflare servers and were not directly related to the owners’ servers. Cloudflare can conceal a website’s IP address to protect it against hacking attempts and cyber attacks like DDoS, and the website owners were using that same feature to avoid IP tracking, which has kept the website live and running to this day.
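To illustrate how an address can be recognized as a Cloudflare server rather than an owner's server, here is a minimal Python sketch. It is not Criminal IP's method; it simply checks whether an address falls inside IPv4 ranges that Cloudflare publishes for its network. The list below is a partial snapshot and may be out of date, and the function name `is_cloudflare_ip` is a hypothetical helper chosen for this example.

```python
import ipaddress

# Partial snapshot of IPv4 ranges published by Cloudflare.
# Assumption: this list may be incomplete or out of date.
CLOUDFLARE_IPV4_RANGES = [
    "103.21.244.0/22",
    "104.16.0.0/13",
    "104.24.0.0/14",
    "162.158.0.0/15",
    "172.64.0.0/13",
    "173.245.48.0/20",
    "198.41.128.0/17",
]


def is_cloudflare_ip(ip: str) -> bool:
    """Return True if `ip` falls inside one of the listed Cloudflare ranges."""
    addr = ipaddress.ip_address(ip)
    return any(
        addr in ipaddress.ip_network(cidr) for cidr in CLOUDFLARE_IPV4_RANGES
    )


# The two addresses quoted in the report.
for ip in ("172.67.210.176", "104.21.69.174"):
    print(ip, is_cloudflare_ip(ip))
```

A match only shows that the address belongs to Cloudflare's proxy network. It reveals nothing about the server behind it, which is exactly why the origin stays hidden.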

So the concern over fake videos of K-pop idols is far from over. It is a long fight that K-pop companies, idols, cybersecurity firms, and fans are still fighting.